New technology can lead to new ways of processing personal information that were not taken into account when the laws that govern NAV’s processing of personal information were made. The development and use of artificial intelligence requires the processing of large amounts of data — often personal information — which is compiled and analysed on a scale that is not possible by other means.

Clear legal authority is required for the development of artificial intelligence in the public sphere. This is safeguarded through the requirements for clear statutory authority in Articles 5, 6 and 9 of the General Data Protection Regulation (GDPR), Article 102 of the Norwegian Constitution and Article 8 of the European Convention on Human Rights, together with the case law associated with these provisions.

Legal basis in general

The most relevant legal basis for NAV’s prediction model is Article 6 (1) (e). This provision allows personal information to be processed if the processing is necessary for the exercise of official authority vested in the data controller. If special categories of personal data are processed, a legal basis under Article 9 is also required. NAV's prediction model processes such data, in particular health information. NAV therefore relies on Article 9 (2) (b), which provides a basis for processing special categories of personal information in order to exercise rights and obligations under social security law.

Both Article 6 (3) and Article 9 (2) (b) require a supplementary legal basis in national law. No explicit or specific statutory authority is required for the exact processing. The purpose of processing must be founded in national law or it must be necessary for the exercise of official authority.

Read Art. 6 GDPR.

The legal authority must nevertheless be sufficiently clear to ensure predictability for those affected and prevent arbitrariness in the exercise of official authority.

Read The Norwegian Constitution's Article 102 and ECHR Article 8.

This means that the law must define how the information can be used and set limits on the way in which the authorities are able to use the information. A specific evaluation must be made as to whether the provision is adequate for the processing in question. The more intrusive the processing, the clearer the statutory authority must be.

NAV’s supplementary legal basis

NAV relies on the National Insurance Act Section 8-7a, seen in conjunction with Section 21-4 of the same Act and the Public Administration Act Section 17, as its supplementary legal basis. In addition, NAV has the authority to process personal information under Section 4a first paragraph of the Act relating to the Labour and Welfare Administration (NAV Act).

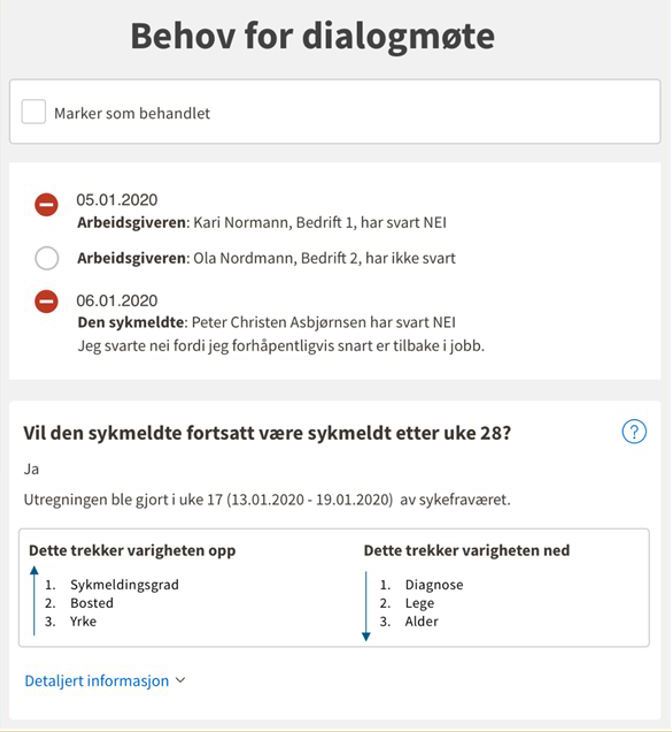

The National Insurance Act Section 8-7a regulates some of NAV’s obligations to follow up persons on sick leave. Section 8-7a second paragraph contains regulations pertaining to dialogue meeting 2 that must be held in week 26 of sickness absence—except “when such a meeting is assumed to be clearly unnecessary”.

The regulation must be viewed in context with the general regulation in the Act’s Section 21-4. This gives NAV general legal authority to collect information in order to exercise its duties. As an administrative agency, NAV is also covered by the general provision in the Public Administration Act Section 17. This requires that “the administrative agency shall ensure that the case is clarified as thoroughly as possible before any administrative decision is made”.

The development phase

It is natural to split the question of legal basis in two, based on the two main phases in an AI project: the development phase and the application phase. The two phases utilise personal information in different ways.

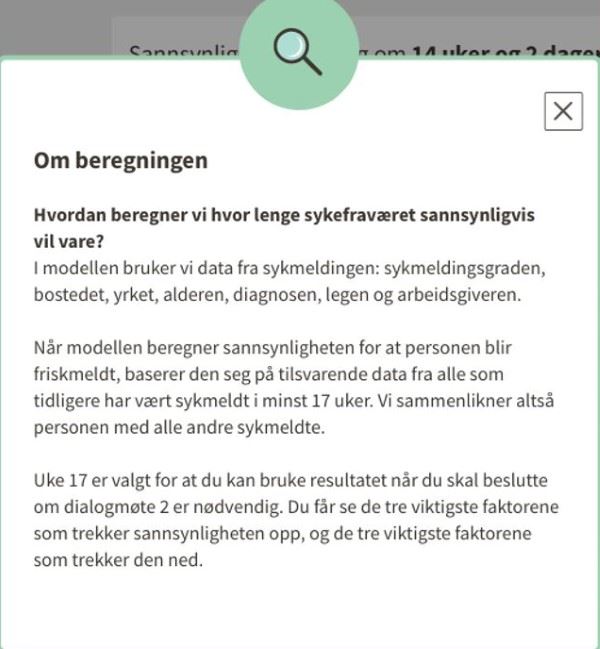

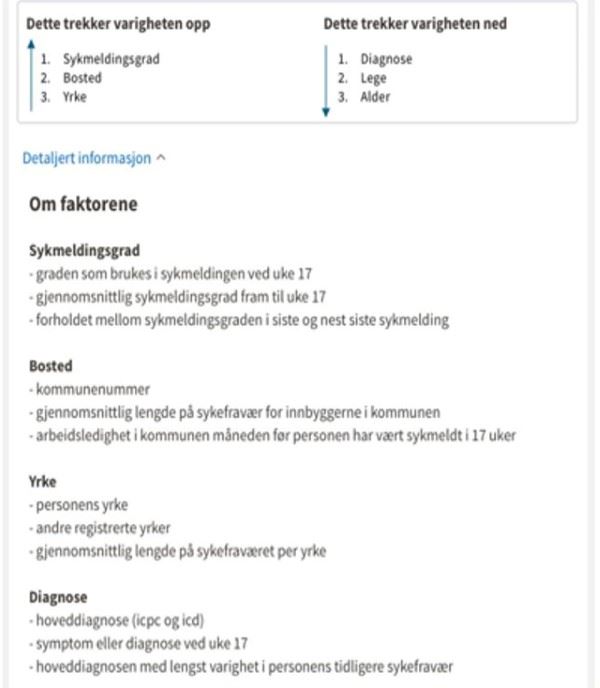

In the development phase, NAV uses a large amount of historical data—personal information concerning those previously on sick leave—from numerous registered individuals, to train a model that will predict the duration of sickness absence of other persons in the future. In the development phase, no personal information is used from people who will be followed up in the future.

The question will then be whether the relevant provisions of the Act (the National Insurance Act Section 8-7a and Section 21-4) that provide statutory authority to process personal information to evaluate whether it is clearly unnecessary to hold a dialogue meeting 2 in a specific case, also allow for the processing of personal information in connection with the development of an AI tool for use in case processing.

A natural interpretation of the wording indicates that these provisions do not provide such statutory authority. Compared with the current evaluations of dialogue meeting 2, developing a prediction model involves processing a far larger volume of personal information, belonging to persons who are no longer on sick leave. It is also important that much of this information will be special categories of personal information, such as diagnoses, sickness absence history and information from the free text field in the medical certificate.

The invasive nature of the processing in the development phase also indicates that clear and explicit statutory authority must be required. It is doubtful whether the National Insurance Act Section 8-7a, cf. Section 21-4, and the Public Administration Act Section 17 are sufficiently specific to constitute a clear and explicit supplementary legal basis under Article 6 (1) (e) and Article 9 (2) (b). The statutes that NAV relies on as its supplementary legal basis do not make it sufficiently evident that information from previous users can be used to develop artificial intelligence.

The application phase

For the application phase, NAV has carried out a thorough evaluation of the supplementary legal basis for use of the prediction model as support in decision-making. The evaluation builds on the National Insurance Act Section 8-7a, seen in conjunction with Section 21-4 of the same Act and the Public Administration Act Section 17. In addition, NAV has the statutory authority to process personal information under the NAV Act Section 4a first paragraph.

NAV has found that no special statutory authority is required for the method itself, including the use of the prediction model. However, an evaluation must be made as to whether the method is a proportionate means of determining whether the person on sick leave should be called in to dialogue meeting 2.

Decisive for this evaluation is whether the use of the prediction model can be considered to be more intrusive for the user. Moreover, an evaluation has been made as to whether the planned use of personal information, both in terms of volume and how the information is used, can be considered necessary in order to comply with the requirements of the Act.

NAV has concluded that the processing of personal information is both proportionate and necessary to achieve the objective, and that it will therefore have a supplementary legal basis for using the prediction model as support for decision-making in the application phase itself, provided it has a legal basis for the development.

Conclusion concerning legal basis

In our assessment, NAV may have a legal basis for processing personal information in using AI in this context. However, it is doubtful whether the legal basis stated by NAV can represent a legal basis for using personal information to develop a prediction model — even though the model will later be able to contribute to better follow-up of persons on sick leave. A legal basis for development and the associated processing of personal information is a prerequisite for NAV to use the prediction model as support for decision-making in decisions that concern whether dialogue meeting 2 shall be held.

It could be argued that there are societal benefits in NAV developing artificial intelligence to improve and increase the efficiency of its work. At the same time, the development of artificial intelligence is a process that challenges several important personal data protection principles. In order to safeguard the rights of registered persons, clear and explicit statutory authority in laws or regulations for this type of development will be necessary. A legal process, with the associated consultations and reports, will help to ensure a democratic foundation for the development and use of artificial intelligence in public administration.

The conclusion above is based on discussions held between the Data Protection Authority and NAV in the sandbox project and is therefore for guidance only, and not a decision by the Data Protection Authority. The responsibility for evaluating the legal basis for the relevant processing lies with NAV as the data controller.

Automated decision-making processes

Even if the processing is lawful, the GDPR gives the registered person the right not to be subject to automated individual decision-making, i.e. decisions taken without human intervention, if the decision produces legal effects concerning him or her or similarly significantly affects him or her. The human involvement must be genuine, not fictitious or illusory.

Read the GDPR Article 22 (1) and p. 20-21 in the Article 29 Data Protection Working Party's “Guidelines on Automated individual decision-making and Profiling for the purposes of Regulation 2016/679”

If the prediction model is used only as support for decision-making, the prediction concerning the length of sickness absence will be one of multiple elements in the NAV adviser’s assessment of whether the person on sick leave will be called in to a dialogue meeting. In such a case, the human evaluation will mean that the processing is not defined as fully automated. However, it could be argued that the decision is in practice fully automated. The advisers’ workload, their knowledge of the algorithm, and the perceived and actual accuracy of the predictions will all influence the risk that the person in the loop (the adviser) accepts the results generated by the prediction model more or less without thinking.

There are various measures that can mitigate this risk. Good routines and training of advisers will be fundamental. The information they receive in connection with the use of the tool must be comprehensible and allow them to evaluate the prediction against other aspects. In addition, routines must be introduced to reveal whether decisions are fully automated.
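One possible routine of this kind can be sketched in code. The example below is only an illustration under stated assumptions, not a description of NAV's systems: the record fields, the 98 % agreement threshold and the minimum case count are all hypothetical. It compares each adviser's decisions with the model's recommendation and lists advisers who almost never deviate from it. A high agreement rate does not prove that decisions are automated in practice, but it can indicate where a closer manual review is worthwhile.

```python
from dataclasses import dataclass


@dataclass
class CaseRecord:
    """One handled case. All field names are hypothetical."""
    adviser_id: str
    model_recommends_meeting: bool   # what the prediction model suggested
    adviser_convened_meeting: bool   # what the adviser actually decided


def agreement_rates(records):
    """Return, per adviser, the share of cases where the decision matched the model."""
    totals = {}
    matches = {}
    for r in records:
        totals[r.adviser_id] = totals.get(r.adviser_id, 0) + 1
        if r.model_recommends_meeting == r.adviser_convened_meeting:
            matches[r.adviser_id] = matches.get(r.adviser_id, 0) + 1
    return {a: matches.get(a, 0) / totals[a] for a in totals}


def flag_for_review(records, threshold=0.98, min_cases=50):
    """List advisers with at least min_cases cases and an agreement rate >= threshold.

    The threshold and min_cases defaults are assumed values for illustration only.
    """
    counts = {}
    for r in records:
        counts[r.adviser_id] = counts.get(r.adviser_id, 0) + 1
    rates = agreement_rates(records)
    return sorted(
        a for a, rate in rates.items()
        if counts[a] >= min_cases and rate >= threshold
    )
```

A flagged adviser would then be followed up with training or a sample review of cases, rather than treated as having acted incorrectly.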

Admittedly, in the longer term, NAV wishes to fully automate the process of convening dialogue meeting 2. Fully automated decisions are not prohibited in every case, but this assumes that the decision does not “produce legal effects concerning him or her or similarly significantly affects him or her”. So, does the model produce these effects?

See Art. 22 GDPR.

NAV's prediction model, which will estimate the length of sickness absence, involves profiling and is an automated process. If the model is actually used only as support for decision-making, the decision itself is not automated. It is the decision on whether or not to convene a dialogue meeting, not the prediction itself, that has the potential to produce legal effects for the registered person or to similarly significantly affect him or her.

The question is thus whether the invitation to dialogue meeting 2 has legal effects for the user or similarly significantly affects the user. A decision has ‘legal effects’ if it affects the person’s legal rights, such as the right to vote, or has effects under contract law. An invitation to a dialogue meeting is not encompassed by this. What remains is to evaluate whether the decision concerning a dialogue meeting affects the user significantly, in a way similar to a legal effect.

The answer is yes if the decision has the potential to affect the individual’s circumstances, conduct or choices, has a long-term or permanent effect, or leads to exclusion or discrimination. Decisions that influence a person’s financial circumstances or access to health services can be considered to have an effect similar to a legal effect.

See p. 21-22 in the Article 29 Data Protection Working Party's “Guidelines on Automated individual decision-making and Profiling for the purposes of Regulation 2016/679”

A decision concerning dialogue meeting 2 is not an individual decision; however, an argument can be made that it ‘significantly affects’ the registered person and that, in a fully automated version, it will fall within the scope of Article 22. For public sector activities, the scope of Article 22 covers more than individual decisions alone, a view that is supported by the preparatory works for the new Public Administration Act. What exactly falls within ‘legal effect’ or ‘similarly significantly affects’ must be evaluated substantively, based on the consequences the decision has for the registered person. For NAV’s prediction model, a distinction might be envisaged between a situation in which a dialogue meeting 2 is convened, and one in which no meeting is convened.

If the person on sick leave is not called in to a dialogue meeting, no obligation arises for the person concerned, and the person retains the right to request a dialogue meeting. In such situations, the decision will have a less invasive effect on the registered person, as long as the possibility of requesting a dialogue meeting remains genuine. At the same time, dialogue meeting 2 is designed to help the person on sick leave to return to work, and not all persons on sick leave will have the resources to assert the right to request a dialogue meeting. This can perhaps be partially safeguarded by providing good information to registered persons.

In situations where a person on sick leave is called in to dialogue meeting 2—which is the principal rule according to the National Insurance Act Section 8-7a—an obligation will arise for the person on sick leave to attend the meeting. Failure to comply with this obligation will—ultimately—lead to the termination of sick pay. In such cases, the obligation to attend dialogue meeting 2 will potentially have a major effect on the person on sick leave and may fall within the scope of Article 22.

In summary, decisions to convene a dialogue meeting may reach the threshold in Article 22, which triggers a prohibition. Decisions not to convene a meeting may fall short of the threshold, provided that the right of the person on sick leave to request a dialogue meeting is genuine. Whether it is possible in practice to separate the decisions in this way will be a matter for NAV to consider.