About the project

NAV's hypothesis is that too many unnecessary dialogue meetings are held, and that these meetings take time away from employers, sickness certifiers (e.g. doctors), the person on sick leave and NAV’s own advisers. This was the motivation for establishing the AI project, which aims to predict the duration of sickness absence.

These meetings are one of several legally mandated waypoints in NAV’s follow-up of sickness absence. Within seven weeks of sickness absence, the person on sick leave and the employer must hold a dialogue meeting. After eight weeks, NAV is required to check whether the person on sick leave is engaged in activity, or whether he or she can be exempted from the activity requirement. Before the sickness absence reaches 26 weeks, NAV is required to evaluate the need for a further dialogue meeting with the person on sick leave, the employer and the sickness certifier. As early as week 17, NAV must establish whether a new dialogue meeting will be necessary, i.e. whether or not the person on sick leave will be declared fit for work by week 26. At each waypoint, NAV evaluates what type of follow-up the person on sick leave requires.

This project focuses on the waypoint at 17 weeks of sickness absence and the decision on whether to convene dialogue meeting 2. By using machine learning to predict the length of sickness absence, NAV wishes to support the adviser’s decision on whether dialogue meeting 2 is necessary. The hope is to:

- Reduce the time that NAV personnel working with sickness absence spend assessing the need for a dialogue meeting.

- Save time for the parties involved in sickness absence by avoiding, to a greater degree, the need to convene unnecessary dialogue meetings.

- Provide better follow-up for persons on sick leave who require a dialogue meeting, by concentrating efforts on those who actually need it.

Presentation

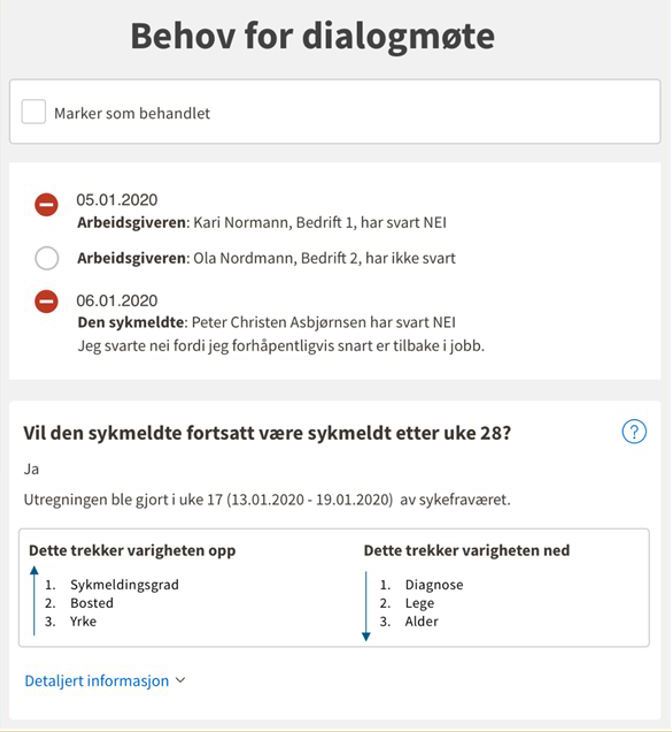

This screenshot shows an early NAV example of how the system’s recommendation could be presented to the adviser, who makes the final decision on whether to convene a dialogue meeting. The recommendation is based on three factors that indicate a longer duration and three that indicate a shorter duration. The NAV adviser is also shown how the employer and the person on sick leave assess the need for a dialogue meeting.
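The recommendation format described here pairs the prediction with the three strongest factors pulling in each direction. A minimal sketch of how such a split could be produced, assuming the model yields a signed contribution per input factor (for example per-feature attributions such as SHAP values), where a positive value pushes the predicted absence longer and a negative value pushes it shorter. The function `top_factors`, the feature names and the numbers are all hypothetical and do not describe NAV's actual model or data.

```python
from typing import Dict, List, Tuple


def top_factors(
    contributions: Dict[str, float], k: int = 3
) -> Tuple[List[Tuple[str, float]], List[Tuple[str, float]]]:
    """Return the k strongest factors indicating a longer absence and the
    k strongest indicating a shorter one.

    Assumption: positive contributions lengthen the predicted duration,
    negative contributions shorten it. Zero contributions are ignored.
    """
    # Largest positive contributions first.
    longer = sorted(
        ((name, v) for name, v in contributions.items() if v > 0),
        key=lambda item: item[1],
        reverse=True,
    )[:k]
    # Most negative contributions first.
    shorter = sorted(
        ((name, v) for name, v in contributions.items() if v < 0),
        key=lambda item: item[1],
    )[:k]
    return longer, shorter


# Hypothetical contributions (in weeks) for one person on sick leave.
example = {
    "diagnosis_group": 2.1,
    "age": 0.8,
    "previous_absences": 1.4,
    "occupation": -0.5,
    "graded_sick_leave": -1.9,
    "workplace_adjustments": -0.3,
    "sickness_certifier_history": 0.2,
}

longer, shorter = top_factors(example)
print("Indicates longer duration:", longer)
print("Indicates shorter duration:", shorter)
```

Keeping the two directions separate, rather than listing the six largest absolute values, mirrors the balanced presentation in the screenshot: the adviser always sees arguments on both sides, even when one direction dominates.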

Sandbox objectives

NAV came to the sandbox with an AI tool that was more or less ready for use, and with thorough legal assessments already carried out. The sandbox project was therefore more a quality assurance of work already performed than a joint innovation process. Its overarching objective was to help establish practices for how NAV can maintain control and accountability throughout an AI development process.

The project will:

- Clarify the scope within which NAV can use AI legally and responsibly.

- Shorten the path from idea to implemented AI in other areas of NAV and for other enterprises that see the potential in similar AI applications.

In other words, the project can be useful and have transferable value not only for NAV in general, but also for other enterprises, especially in the public sector.

In the sandbox, we discussed issues relating to the legal basis, i.e. whether NAV has the right to use machine learning as planned. We also discussed the fairness of the model, including how discrimination in such a model can be revealed and counteracted. Finally, we looked at the requirements for a meaningful explanation of the model, at both the system and the individual level.

Key issues

The work in the sandbox has revolved around three AI-related issues:

- Legal basis

- Fairness

- Explainability

In the first section, we examine the legal challenges relating to NAV’s legal basis, i.e. whether it is lawful to process personal information to develop and use a machine learning model. The second section assesses NAV’s approach to the requirement that this type of model must be fair. In the final section, we discuss transparency and how the function and outcomes of the model can be explained.