How to identify algorithmic bias?

Artificial intelligence has great potential for improving diagnostics and treating disease. At the same time, there are several examples of artificial intelligence perpetuating and reinforcing existing social discrimination. The sandbox project wanted to explore how potential bias in the EKG AI algorithm could be identified.

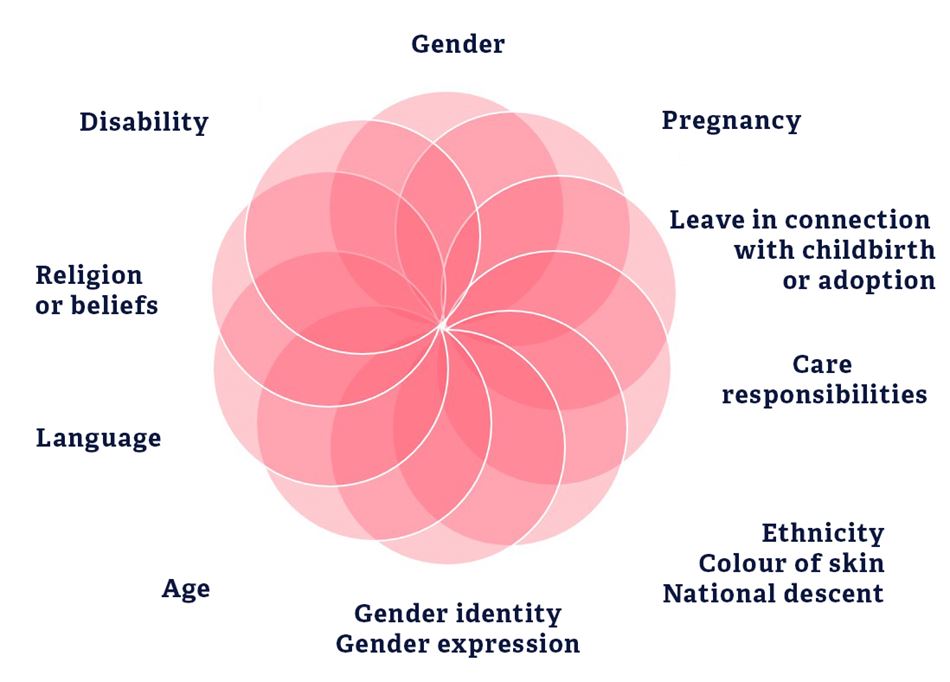

To ensure a fair algorithm, we wanted to see whether EKG AI gives less accurate predictions for some patient groups. In consultation with the Equality and Anti-Discrimination Ombud (LDO), we used the bases of discrimination [13] defined in Article 6 of the Equality and Anti-Discrimination Act as our starting point. We learned that it is sometimes not sufficient to look at these bases of discrimination in isolation; they must also be considered in combination, as in the case of a “minority woman”.
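The need to look at combinations can be made concrete with a small, hypothetical example. The minimal Python sketch below computes prediction accuracy for each value of a single attribute and for each pair of attributes. The field names (`sex`, `minority`, `label`, `prediction`) and the eight toy records are invented for illustration; they are not EKG AI data, and the report does not describe how EKG AI is evaluated.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical toy records: two protected attributes, the true outcome
# (label 1 = heart failure) and the model's prediction. Not real data.
RECORDS = [
    {"sex": "F", "minority": True,  "label": 1, "prediction": 0},
    {"sex": "F", "minority": True,  "label": 1, "prediction": 0},
    {"sex": "F", "minority": False, "label": 1, "prediction": 1},
    {"sex": "F", "minority": False, "label": 0, "prediction": 0},
    {"sex": "M", "minority": True,  "label": 1, "prediction": 1},
    {"sex": "M", "minority": True,  "label": 0, "prediction": 0},
    {"sex": "M", "minority": False, "label": 1, "prediction": 1},
    {"sex": "M", "minority": False, "label": 0, "prediction": 1},
]


def subgroup_accuracy(records, attributes):
    """Accuracy for every combination of values of the given attributes."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for r in records:
        key = tuple(r[a] for a in attributes)
        totals[key] += 1
        hits[key] += int(r["label"] == r["prediction"])
    return {key: hits[key] / totals[key] for key in totals}


def audit(records, attributes):
    """Evaluate each attribute on its own, then every pair of attributes."""
    report = {}
    for size in (1, 2):
        for combo in combinations(attributes, size):
            report[combo] = subgroup_accuracy(records, combo)
    return report


if __name__ == "__main__":
    for combo, scores in audit(RECORDS, ["sex", "minority"]).items():
        for key, acc in sorted(scores.items()):
            print(combo, key, f"{acc:.2f}")
```

On these toy records, women and minority patients each score 0.50 when viewed one attribute at a time, but the subgroup of minority women scores 0.00 (both of its records are missed), which the single-attribute view cannot reveal. In real data, intersectional subgroups are often small, so their estimates carry considerable statistical uncertainty.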

Definition of discrimination

In order for differential treatment to be considered discrimination pursuant to the Equality and Anti-Discrimination Act, it must be based on one or more bases of discrimination.

Read more about bases of discrimination on the Anti-Discrimination Tribunal website.

In Norway, we have free universal health care, which is a good starting point for a representative data source covering a wide variety of patients. There may still be situations, however, where certain patient groups have not had the same access to a specific treatment, which could give rise to bias in the algorithm. In this project, we chose to explore whether the accuracy of EKG AI’s predictions varied with the patient’s ethnic background. One of the reasons we chose to focus on discrimination on grounds of ethnicity was that there are several examples of algorithms that systematically discriminate against ethnic minorities. From a health perspective, patients with different ethnic backgrounds can present with different symptoms of heart failure and show variations in EKG readings and blood test results. In this context, it is important to point out that the term “ethnicity” is used here to refer to the patient’s biological or genetic origin.

The challenge for Ahus is that it has limited or no data on patients’ ethnicity. Without this information, it is not possible to check whether the algorithm makes less accurate predictions for these patient groups. To determine whether such bias exists, Ahus will have to conduct a clinical trial. In such a trial, information about ethnicity could be collected on the basis of consent, making it possible to see whether ethnic minorities systematically end up in a worse position than the majority of the patients the algorithm was trained on.

To check EKG AI for bias, a limit value for statistical uncertainty must be defined for the algorithm. Because prejudice and discrimination will always exist in the real world, algorithms trained on real-world data will also contain errors and biases. The key question has therefore been where to draw the line for unacceptable differential treatment in EKG AI.
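The report does not say how such a limit value should be expressed. As one illustrative possibility, the minimal Python sketch below treats it as a maximum tolerated gap in sensitivity (the share of heart-failure patients the model correctly flags) between a reference group and a minority group, and uses a normal-approximation (Wald) confidence interval to account for statistical uncertainty. The `GroupCounts` type, the `tolerance` of 0.05 and all counts are hypothetical assumptions, not figures from EKG AI.

```python
import math
from dataclasses import dataclass


@dataclass
class GroupCounts:
    """Hypothetical counts among patients who truly have heart failure."""
    true_positives: int   # correctly flagged by the model
    false_negatives: int  # missed by the model

    @property
    def n(self) -> int:
        return self.true_positives + self.false_negatives

    @property
    def sensitivity(self) -> float:
        if self.n == 0:
            raise ValueError("group has no heart-failure patients")
        return self.true_positives / self.n


def sensitivity_gap_ci(reference: GroupCounts, group: GroupCounts, z: float = 1.96):
    """Gap in sensitivity (reference minus group) with a Wald interval.

    z = 1.96 gives an approximate 95% interval.
    """
    p1, p2 = reference.sensitivity, group.sensitivity
    se = math.sqrt(p1 * (1 - p1) / reference.n + p2 * (1 - p2) / group.n)
    gap = p1 - p2
    return gap, gap - z * se, gap + z * se


def assess(reference: GroupCounts, group: GroupCounts, tolerance: float) -> str:
    """Compare the interval with an assumed limit value (`tolerance`).

    Only gaps where the group does worse than the reference count against the limit.
    """
    _gap, low, high = sensitivity_gap_ci(reference, group)
    if high <= tolerance:
        return "within limit"
    if low > tolerance:
        return "exceeds limit"
    return "inconclusive"


if __name__ == "__main__":
    majority = GroupCounts(true_positives=900, false_negatives=100)
    print(assess(majority, GroupCounts(70, 30), tolerance=0.05))  # exceeds limit
    print(assess(majority, GroupCounts(88, 12), tolerance=0.05))  # inconclusive
```

With 1,000 reference patients and 100 minority patients, a 20-percentage-point gap exceeds the assumed limit, while a 2-point gap is inconclusive because the interval is too wide to tell whether the limit is breached. This illustrates how a small minority sample may be unable to settle the question. The Wald interval is used here for simplicity; it is known to behave poorly for small samples or for rates close to 0 or 1.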

Wanted vs. unwanted differential treatment

The main purpose of EKG AI is to prioritise patients with active heart failure over those who do not have heart failure. This is a form of differential treatment. The determining factor in an assessment pursuant to anti-discrimination legislation is whether the differential treatment is unfair.

To define such a limit value, a clinical trial must be conducted to explore whether the algorithm makes less accurate predictions for certain patient groups based on one or more bases of discrimination. If it turns out, for example, that EKG AI prioritises patient groups on the basis of ethnicity rather than medical factors such as EKG readings and diagnosis codes, this will be considered unfair differential treatment under the legislation.
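One way to separate differences explained by medical factors from differences tied to group membership is to compare how often each group is prioritised among patients with the same true heart-failure status. This is the idea behind the fairness criterion usually called equalised odds; it is our illustrative choice, not a method the project reports using. The minimal Python sketch below assumes hypothetical trial records of the form `(group, has_heart_failure, prioritised_by_model)` with invented values.

```python
from collections import Counter

# Hypothetical trial records: (group, has_heart_failure, prioritised_by_model)
TOY_RECORDS = [
    ("majority", True, True), ("majority", True, True), ("majority", True, False),
    ("majority", False, False), ("majority", False, False), ("majority", False, True),
    ("minority", True, True), ("minority", True, False), ("minority", True, False),
    ("minority", False, False), ("minority", False, False), ("minority", False, True),
]


def priority_rates(records):
    """Share of patients prioritised, per (group, true heart-failure status)."""
    prioritised = Counter()
    totals = Counter()
    for group, has_hf, flagged in records:
        totals[(group, has_hf)] += 1
        prioritised[(group, has_hf)] += int(flagged)
    return {key: prioritised[key] / totals[key] for key in totals}


def largest_gaps(records):
    """Largest between-group gap in priority rate within each true status.

    gaps[True] compares true positive rates, gaps[False] false positive rates.
    """
    rates = priority_rates(records)
    gaps = {}
    for status in (True, False):
        values = [rate for (_group, has_hf), rate in rates.items() if has_hf == status]
        gaps[status] = max(values) - min(values)
    return gaps


if __name__ == "__main__":
    for status, gap in largest_gaps(TOY_RECORDS).items():
        label = "with heart failure" if status else "without heart failure"
        print(f"patients {label}: gap {gap:.2f}")
```

In the toy data, patients without heart failure are prioritised equally often in both groups (gap 0.00), but minority patients who do have heart failure are prioritised in one of three cases versus two of three for the majority (gap of about 0.33). A gap of this kind is not explained by the medical outcome and would call for closer scrutiny, bearing in mind the uncertainty that comes with small samples.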

Checking whether the algorithm discriminates will normally require the collection and processing of new personal data. Because this is a new purpose, the lawfulness of the processing must be assessed anew under Article 6, and potentially Article 9, of the GDPR.

It is noteworthy that the European Commission, in Article 10(5) of its Proposal for a Regulation laying down harmonised rules on artificial intelligence (Artificial Intelligence Act), would allow the processing of special categories of personal data, such as health information, to the extent that it is strictly necessary for the purposes of ensuring bias monitoring, detection and correction. If adopted, this provision would, in certain situations, provide a legal basis for processing special categories of personal data in order to examine whether algorithms discriminate.

The data minimisation principle in Article 5 of the GDPR would still limit the types of personal data that could be processed. The principle requires the data processed to be adequate, relevant and limited to what is necessary in relation to the purposes for which they are processed. The necessity requirement also includes an assessment of the proportionality of the processing. When assessing whether Ahus should collect and process information about patients’ ethnicity, we have therefore weighed proportionality against the consequences a potential bias may have for the individual patient.

The predictions from EKG AI are only one of many sources of information that health personnel use when deciding on a patient’s treatment. This means that a potential algorithmic bias would have minimal potential for harm. If the algorithm, for example, fails to detect heart failure in a patient (a false negative), the heart failure could still be identified through an ultrasound of the heart and subsequent blood tests. Ahus must therefore ask whether collecting and processing information about ethnicity, which is a special category of personal data, is proportionate when the goal is to identify potential algorithmic bias.

There are no clear answers as to how such a limit should be defined. In some cases, it will only be possible to say whether the processing was necessary to identify discrimination after the information has already been collected and processed. In a medical context, however, it is often as important to identify what is not relevant as what is relevant for sound medical intervention.