How should information be tailored to the patients?

Transparency is a fundamental principle in the GDPR. Transparency about the processing of personal data may help to uncover errors and unfair discrimination. It contributes to trust and enables the individual to uphold their rights and protect their own interests.

Providing the patient with information is a fundamental precondition for effective medical assistance. In many cases, the requirements set out in data protection and health-related legislation will align with and complement each other. Nevertheless, there are some differences, which we highlight here. When using a clinical decision-support system, the clinician will play a key role in providing relevant information to the patient.

Transparency in the development phase

Article 13 and Article 14 of the GDPR require the data controller to inform the data subject when their personal data are collected and used in connection with the development of algorithms. Article 13 applies to data collected from the data subjects themselves, while Article 14 regulates cases where the data are collected from other sources, such as third parties or publicly available data.

In this project, the personal data will be collected from sources other than the data subject. The data are obtained from the patient records system and have been generated by the hospital through diagnoses, registration of admissions, etc. The information that the patient must receive is therefore regulated by Article 14.

The data used concern person-specific admission records from almost 400,000 patients. From these, the following data are extracted for training purposes:

- gender

- age

- no. of bed days

- previous readmissions

- primary and secondary diagnoses

- length of hospital stay
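
As a minimal sketch of the extraction described above, the snippet below keeps only the listed fields and drops everything else in an admission record. The field names and the sample record are hypothetical; the actual schema of the patient records system is not described in this report.

```python
# Hypothetical field names mirroring the list above; the real schema is unknown.
TRAINING_FIELDS = (
    "gender",
    "age",
    "bed_days",
    "previous_readmissions",
    "primary_diagnosis",
    "secondary_diagnoses",
    "length_of_stay",
)


def extract_training_fields(record: dict) -> dict:
    """Return a copy of the record restricted to the training fields."""
    missing = [name for name in TRAINING_FIELDS if name not in record]
    if missing:
        raise KeyError(f"record is missing fields: {missing}")
    return {name: record[name] for name in TRAINING_FIELDS}


# Made-up record, including identifying fields that must not be extracted.
sample_record = {
    "patient_name": "Example Person",
    "national_id": "00000000000",
    "gender": "F",
    "age": 70,
    "bed_days": 4,
    "previous_readmissions": 1,
    "primary_diagnosis": "EXAMPLE-CODE-1",
    "secondary_diagnoses": ["EXAMPLE-CODE-2"],
    "length_of_stay": 4,
}

training_row = extract_training_fields(sample_record)
```

Using an explicit allow-list, rather than deleting known identifiers, means that any field added to the source records later is excluded by default.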

Informing each of these patients individually can be a resource-intensive process.

Article 14(5)(a) to (c) of the GDPR establishes certain exemptions from the general rule. An exemption may be made if the data subject already has the information, or if providing it proves impossible or would involve a disproportionate effort. The same applies if obtaining or disclosing the data is expressly laid down by EU or member state law to which the controller is subject and which provides appropriate measures to protect the data subject’s legitimate interests.

It is worth mentioning here that, under Section 29 of the Health Personnel Act, the following count as appropriate measures: a duty to submit an application to the Norwegian Directorate of Health, requirements relating to data minimisation, and a duty of confidentiality.

In this project, Article 14(5)(b) and (c) may be applicable during the development phase.

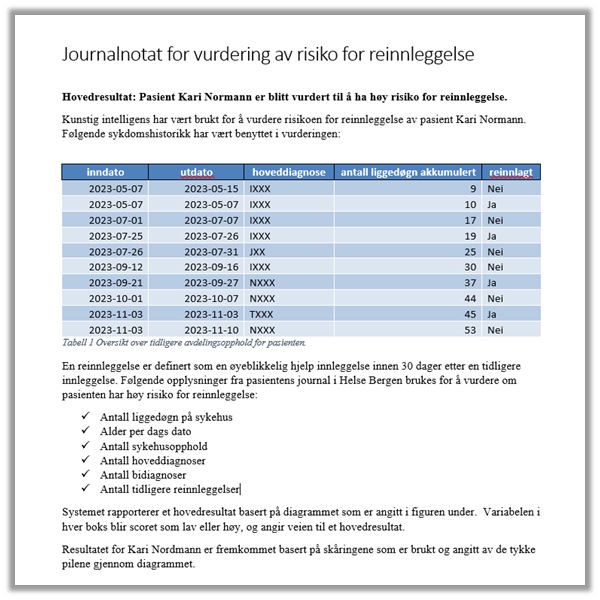

However, it must always be clear to the individual data subject that their data are being used for the development of AI, cf. Article 12(1) and the general principle of transparency laid down in Article 5(1)(a) of the GDPR. This may be achieved by publishing general information about the data processing in publicly accessible places, such as the entity/data controller’s website. This is especially important for building trust when new technologies, such as AI, are used. In keeping with the transparency principle, Helse Bergen will, when the algorithm is used, present the underlying data, the result and any uncertainties relating to the individual patient in an entry in that patient’s medical records, which the patient can view at www.helsenorge.no.
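
To make the three elements of such a record entry concrete (underlying data, result and uncertainty), the following is a minimal sketch of how an entry might be structured and rendered as text. The class, the function, the field names and the sample values are all hypothetical illustrations and do not describe Helse Bergen's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class RecordEntry:
    """Hypothetical structure for one decision-support entry in a medical record."""

    underlying_data: dict  # the inputs the algorithm used for this patient
    result: str            # the output shown to the clinician
    uncertainty: str       # any uncertainty attached to the result


def render_entry(entry: RecordEntry) -> str:
    """Render the entry as plain text that a patient can read in their record."""
    lines = ["Decision-support entry", "Underlying data:"]
    for name, value in entry.underlying_data.items():
        lines.append(f"  {name}: {value}")
    lines.append(f"Result: {entry.result}")
    lines.append(f"Uncertainty: {entry.uncertainty}")
    return "\n".join(lines)


# Made-up values; the placeholders stand in for text produced by the tool.
example = RecordEntry(
    underlying_data={"age": 70, "previous readmissions": 1},
    result="<result text from the tool>",
    uncertainty="<uncertainty statement from the tool>",
)
entry_text = render_entry(example)
```

Keeping the three elements as separate fields makes it easy to check that none of them is omitted before the entry is written to the record.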

What information must be provided in the development phase?

Patients should receive general information that patient data are being used to develop AI tools. This information should be available to the data subject before any further processing takes place.

When personal data are processed in connection with the development of AI systems, it is particularly important that information on the following is provided:

- Which types of personal data are processed.

- The purpose for which the algorithm is being developed.

- What happens to the data once the development phase has finished.

- Where the data are obtained from.

- The extent to which the AI model processes personal data and whether measures have been initiated to anonymise the data.
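
The items above can be treated as a checklist. As a minimal sketch, the snippet below flags which items are still missing from a draft information notice before it is published. The key names, the helper function and the draft text are hypothetical and serve only to illustrate the idea.

```python
# Hypothetical keys, one per item in the list above.
REQUIRED_ITEMS = (
    "data_categories",
    "purpose",
    "after_development",
    "data_source",
    "processing_and_anonymisation",
)


def missing_items(notice: dict) -> list:
    """Return the required items that are absent or blank in the notice."""
    return [
        item
        for item in REQUIRED_ITEMS
        if not str(notice.get(item, "")).strip()
    ]


# Illustrative draft in which several items have not yet been written.
draft_notice = {
    "data_categories": (
        "gender, age, bed days, previous readmissions, "
        "diagnoses, length of stay"
    ),
    "purpose": "",  # left blank on purpose to show the check
    "data_source": "patient records system",
}

gaps = missing_items(draft_notice)
# gaps == ["purpose", "after_development", "processing_and_anonymisation"]
```

A check of this kind only confirms that each item has been addressed; whether the wording is clear and intelligible to patients still has to be assessed by a person.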

Information relating to the right to object to personal data being used for development

AI is a new technology and there may be many reasons why patients do not want their health data to be used for the development of AI tools. Patients should be informed in general terms that they have the right to object to their health data being used for the development of AI tools. This information may, for example, be published on the entity/data controller's website.

The right to object to one’s own health data being used in an AI tool is enshrined in Section 7 of the Medical Records Act, cf. Section 17, and Section 5-3 of the Patients’ Rights Act.

The right to object under Article 21 of the GDPR does not apply to treatment-oriented health registries authorised pursuant to Article 6(1)(c).

Transparency in the application phase

In the application phase, the information that must be supplied will depend on whether the AI model is being used for decision support or for fully automated decision-making.

For automated decisions which have a legal effect or significantly affect a person, specific information requirements apply. If processing can be categorised as automated decision-making or profiling pursuant to Article 22, there are additional requirements for transparency, cf. Article 13(2)(f) and Article 14(2)(g). This includes:

- Information that the data subject is the subject of an automated decision.

- Information that they have the right not to be subject to an automated decision under Article 22.

- Meaningful information about the AI system's underlying logic.

- The significance and expected consequences of being subject to an automated decision.

Although it is not an explicit requirement that the data subject be provided with supplementary information when the AI system is used as a decision-support tool, the Norwegian Data Protection Authority recommends that supplementary information be provided in such cases, particularly when health data are used for the purpose of profiling. This is supported by Article 14(2)(g), together with Article 12(1) and Recital 60. It is also in line with the guidelines published by the European Data Protection Board (EDPB), see page 25, which also provides guidance on what a meaningful explanation of the logic may contain.

A meaningful explanation will depend not only on technical and legal requirements, but also on linguistic and design-related considerations. The intended target group for the explanation must be assessed. In this case, the target groups will be healthcare personnel and patients.

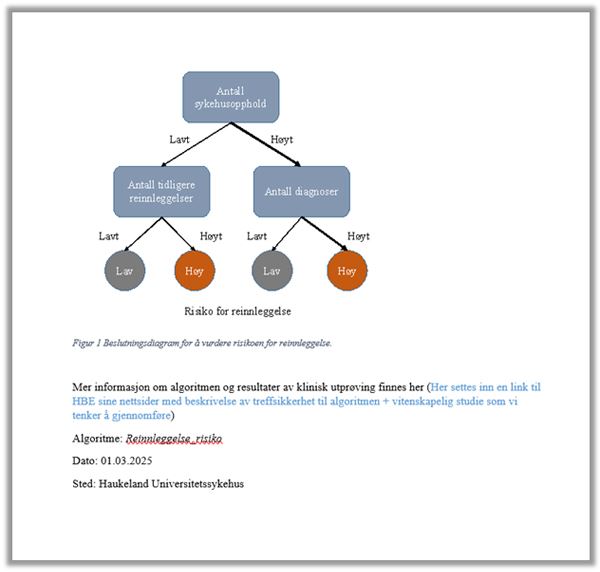

In many cases, a complex mathematical explanation of how the algorithm and machine learning work will not be the right approach to take. Rather, the data controller should focus on making the information clear and intelligible to the data subject. For example, the controller may disclose:

- The categories of data that have been or will be used in the profiling and decision-making process.

- Why these categories are considered relevant.

- How a profile used in the automated decision-making process is constructed, including any statistics used in the analysis.

- How the profile is used in a decision that affects the data subject.

Such information will generally be more relevant for the data subject and contribute to greater processing transparency. It may also be useful to consider visualisation and interactive techniques to augment transparency about how the algorithm works.

This information will also be useful for healthcare personnel, helping to build their confidence in the system and ensuring a genuine evaluation of its output.

Other requirements concerning the disclosure of information about the reasons for automated decisions may also apply to public sector entities. Such requirements may, for example, be found in the Public Administration Act or sector-specific legislation.

Transparency in the continuous machine learning phase

In connection with the use of personal data collected during the application phase for continuous machine learning, the requirement to provide information will largely be the same as the requirements in the initial development and application phases.

In this solution, the proposal is for periodic rounds of machine learning, in which case the requirements will be the same as for the development phase.

What information requirements are laid down in the healthcare legislation?

Healthcare personnel have a statutory duty to provide patients with information, and patients have a right to receive information that can fulfil the transparency requirement.

Section 4-1 of the Patients’ Rights Act establishes that consent to medical assistance is valid only if the patient has received the necessary information about their own medical condition and what the medical assistance entails. The same may be deduced from Section 4 of the Health Personnel Act concerning the requirement to provide adequate and caring medical assistance.

Furthermore, it follows from Section 3-2 of the Patients’ Rights Act that the patient must have the information necessary to obtain an understanding of their own medical condition and what the medical assistance being provided entails. The patient must also be informed about potential risks and side-effects.

These duties mean that information must be provided to the extent necessary for the patient to understand what the medical assistance provided entails.

Our assessment is that the decision-support tool which Helse Bergen has developed does not represent a high risk to the data subject’s rights and freedoms, and must be treated in the same way as other technical and medical aids (blood pressure monitor, X-ray machine, blood tests, etc.) with regard to the information that must be provided. Information will be provided and assessed by healthcare personnel, who will also make the final decision.

Proposal concerning medical record entries

A meaningful explanation will depend not only on technical and legal requirements, but also on linguistic and design-related considerations. The target groups for the explanation must also be assessed, which may mean giving different explanations to healthcare personnel and patients. If the explanations appear to be standardised, this could undermine their significance for the decision-making process. Social factors such as trust in the entity, the significance of the score and trust in AI systems in general may also influence whether an explanation is perceived as being meaningful.

Information given to patients by healthcare personnel must therefore be personalised. This may also be relevant for medical record entries in order to communicate the information in an intelligible fashion. Here are some examples of how medical record entries may be designed: