The background to this question was twofold. First, federated learning requires all participants to have the same data points in the same format. Finterai wanted to discuss how much leeway it had to facilitate such standardisation without assuming the responsibilities of a data controller.

Second, there is a slight possibility that the models contain personal data. Finterai therefore wanted to discuss what consequences this might have for the company when it checks the quality of the models.

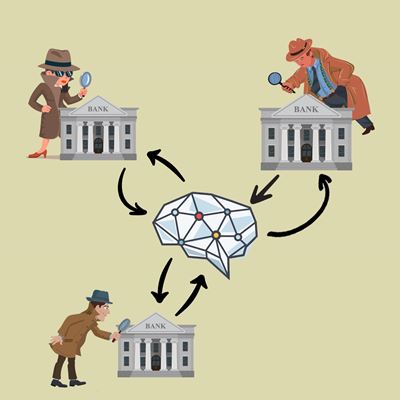

In the sandbox, we jointly identified various personal data processing actions that occur in or are presumed by Finterai’s processing. We selected three different processing activities to examine in more detail:

- The standardising of transaction data

- The collection of third-party data

- The checking of vulnerabilities in the model

We elected to examine these processing activities in further detail because they are key processes in, or are presumed by, Finterai’s solution, and because they are processes that are also relevant for comparable enterprises or those with an obligation under the Anti-Money Laundering Act.

The Norwegian Data Protection Authority has not come to a conclusion on the respective roles of Finterai and the banks with regard to the three selected processing activities. This is because we have not taken a position on what could potentially be the legal basis for Finterai's or the banks’ processing of personal data. The discussions in the sandbox have therefore primarily sought to identify relevant factors in the assessment of roles, based on specific processing activities, and determine the direction in which the various factors lean. We emphasise that the Data Protection Authority’s role assessments are merely advisory. Finterai and the banks must themselves come to a conclusion about their own roles on the basis of an overall assessment of the actual circumstances.

1. Standardising transaction data

To make the banks’ local data compatible with Finterai's federated learning solution, the data must be standardised and structured before they can be used. Finterai provides a software solution that uses AI-driven natural language processing. The objective of the software is to standardise data, including SWIFT messages, so that they have the same format as equivalent data from other banks that also want to participate in the federated learning process.
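To make the idea of a shared format concrete, the following is a minimal sketch of what "standardising" a message could mean. It is not Finterai's software: the text describes an AI-driven natural language processing approach, whereas this stand-in uses simple rules. The `StandardTransaction` record, its field names and the `standardise` function are hypothetical, and the input is a heavily simplified tagged message using only a reference field (`:20:`) and a date/currency/amount field (`:32A:`).

```python
import re
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class StandardTransaction:
    """Hypothetical common record format (field names are assumptions)."""
    reference: str
    value_date: str  # ISO 8601 date, YYYY-MM-DD
    currency: str    # three-letter currency code
    amount: Decimal


# Matches tagged lines such as ":20:REF123" and captures (tag, value).
TAG_LINE = re.compile(r"^:(\w+):(.*)$", re.MULTILINE)
# Field 32A packs date (YYMMDD), currency and amount (decimal comma) together.
FIELD_32A = re.compile(r"(\d{2})(\d{2})(\d{2})([A-Z]{3})(\d+(?:,\d*)?)")


def standardise(raw_message: str) -> StandardTransaction:
    """Convert one simplified tagged message into the common record format."""
    fields = {tag: value.strip() for tag, value in TAG_LINE.findall(raw_message)}
    match = FIELD_32A.fullmatch(fields.get("32A", ""))
    if "20" not in fields or match is None:
        raise ValueError("message lacks a usable :20: or :32A: field")
    yy, mm, dd, currency, amount = match.groups()
    return StandardTransaction(
        reference=fields["20"],
        value_date=f"20{yy}-{mm}-{dd}",  # assumption: 21st-century dates only
        currency=currency,
        amount=Decimal(amount.replace(",", ".")),
    )
```

In this sketch the function takes raw text and returns a structured record without contacting any external service, which mirrors the point that conversion can take place entirely inside the bank's own environment.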

In the sandbox, we discussed roles relating to the standardising of SWIFT messages. However, the factors to be considered are also relevant for an assessment of roles relating to the standardisation of other categories of data, such as KYC information and third-party data.

The most relevant purpose for standardising data in this context will be to adapt the data’s format so they can be used in a federated learning process across the participating banks. Each bank decides for itself whether it wants to use Finterai’s federated learning service. Furthermore, each bank decides for itself which method it wants to use to convert the data to the format required for participation. It is thus not a requirement that they exclusively use Finterai's standardisation software.

Furthermore, the software is installed and run within the bank’s own IT environment, without Finterai or the other participating banks having access to the data being standardised. The software’s algorithms are also trained internally at the individual bank, and it is the bank itself which is responsible for this process. The result of the training will not be shared with Finterai or the other banks which use the same software.

The factors highlighted above indicate that each bank has a decisive influence over both the decision to employ federated learning as part of their anti-money laundering endeavours and the means it will use to achieve this end.

In our sandbox discussions, we have not identified any purpose related to the banks’ use of the standardisation software where Finterai has a decisive influence. As previously mentioned, Finterai will not have access to the personal data processed by the banks or the results of the processing (standardised data or learning from AI-driven natural language processing).

On this basis, the Norwegian Data Protection Authority deems it highly unlikely that Finterai has a decisive influence over the purpose for or means by which the data is processed. In that case, Finterai will not be accorded the status of data controller. Since Finterai does not process personal data on behalf of the banks, it will not have the status of data processor either.

2. Collecting third-party data

It is not merely the data’s format that must be the same across the entities wanting to use federated learning. The data categories used must also be the same. This is to enable the banks to access the same type of data and to allow input data to be interpreted by the model.

To ensure that all the banks using the service have access to the same data categories, Finterai must establish a framework for the types of data that may be used in the federated learning system. In addition to data from SWIFT messages and KYC data, which are obtained from the customers or, potentially, the transaction counterparties, Finterai requires the banks to collect what it calls third-party data. This will often contain personal data.
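As an illustration only, the requirement that every participant holds the same data categories could be expressed as a simple pre-participation check run by the bank itself. The category identifiers and function names below are hypothetical; the three categories are the ones named in the text (SWIFT messages, KYC data and third-party data).

```python
# Data categories named in the text; the identifiers are illustrative assumptions.
REQUIRED_CATEGORIES = frozenset({"swift_messages", "kyc", "third_party"})


def missing_categories(available):
    """Return the required categories a bank does not yet hold, sorted by name."""
    return sorted(REQUIRED_CATEGORIES - set(available))


def can_participate(available):
    """A bank can join only when no required category is missing."""
    return not missing_categories(available)
```

A bank holding only SWIFT and KYC data would, under this sketch, learn that it still needs to collect third-party data before it can take part. The decision whether to do so remains the bank's.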

In the sandbox, we discussed what consequences it may have for Finterai’s role that it defines the data categories which participants in the federated learning process must have access to. We have presumed that the banks are already in possession of the SWIFT messages and KYC data. The discussions have therefore primarily related to the collection of third-party data.

The purpose of standardisation is to ensure that the participating banks have access to the same type of data. However, there is another purpose which is crucial for the choice of data categories, namely that the banks should be able to build up and train models that are well suited to uncovering suspicious transactions.

It is the banks that are responsible for complying with the anti-money laundering regulations. Finterai has no independent responsibility under this regulatory framework. As discussed in the paragraphs concerning the standardising of transaction data, it is the banks themselves that have decisive influence over whether they use Finterai's federated learning solution as part of their anti-money laundering endeavours. There is no requirement for the banks to use Finterai’s software for the collection of third-party data.

Each bank decides for itself whether it wants to use Finterai’s service and therefore which categories of third-party data it must subsequently collect, and by what means. If a bank disagrees with Finterai about the data categories that need to be collected for this purpose, it can choose not to use Finterai’s solution as part of its anti-money laundering endeavours. In other words, it can be argued that it is the individual bank which has decisive influence over the means to be used to achieve the intended purpose.

Data categories may be said to be essential means (which and whose personal data are to be collected), or the “core” of how the purpose is to be achieved. Furthermore, the data will not be shared with Finterai. An important factor in the assessment of roles is who has decisive influence over which categories of third-party data must be collected (means) in order to train models that are well suited to uncovering suspicious transactions (purpose).

The factors that have been highlighted above indicate that it is the individual bank itself that has decisive influence over the purpose and means of third-party data collection. We have, however, also looked at any self-interest that Finterai may have in the decision about which data categories are to be used in the solution.

In the assessment of roles relating to the standardising of transaction data, we emphasised that Finterai will have access neither to the personal data being processed nor the results of that processing. With regard to the collection of third-party data, Finterai will have no access to this type of personal data, although it will have access to the models that have been developed with the help of that personal data.

These models will also be available to all the banks participating in the federated learning process. It could be argued that the better these models are, the more attractive Finterai’s service will probably become. The banks’ access to models that are adequate and effective in their anti-money laundering endeavours could therefore be perceived as promoting the sale of Finterai's service, thereby giving the company a commercial advantage. The purpose of choosing which data categories to include in the service is, however, to enable the banks to build effective AI models with which to fulfil their obligations under the anti-money laundering regulations.

Under the European Data Protection Board's guidelines, any purely commercial advantage that Finterai might derive here is not in itself sufficient to qualify as a purpose with respect to processing. This would imply that Finterai does not have decisive influence over the purposes mentioned above, with the result that data controller responsibility is not triggered on Finterai’s part.

Read the European Data Protection Board’s guidelines relating to the roles of data controller and data processor (07/2020)

It is also important to consider whether there is a legal basis for the collection and use of third-party data in this way before the data are collected and used. Although the matter of legal basis is not addressed as a separate topic in this report, we would nevertheless like to remind readers of it. The data minimisation principle must also be considered with respect to the collection and use of third-party data. This will be discussed below in the chapter on data minimisation.

3. Checking for vulnerabilities in the model

The final processing activity we discussed relates to the checks that Finterai must perform in connection with the execution of federated learning.

The models shared between the banks and Finterai are, in principle, intended to be devoid of personal data. There is nevertheless a certain risk that personal data on which a model has been trained could be extracted if the model has vulnerabilities. This is referred to as data leakage and means that it is possible to re-identify individuals. Such vulnerabilities may arise if an error has occurred during the training process.

Finterai plans to perform a variety of checks on the models to be trained using federated learning. One check that all models must undergo after training, and before being shared with other participants, is meant to uncover any risk of data leakage from the model. If Finterai's check uncovers vulnerabilities that could lead to data leakage, processing of the model in question could be deemed the processing of personal data.

There is reason to assume that such errors will arise from time to time. The question raised in the sandbox was Finterai's role in the event that it, in practice, ends up processing personal data due to errors made by the banks.

The purpose of vulnerability checks is to prevent models containing personal data from slipping into the federated learning system. It might be thought that each bank could perform this check itself before sending the model to Finterai. However, there are good reasons for this checking process to be performed by Finterai. One is that quality control then does not depend on the competence of the individual bank.

Finterai has disclosed that any models with vulnerabilities will be deleted and the bank from which the model came notified. By checking the models for weaknesses, and excluding models containing errors, Finterai is helping the banks to prevent personal data from going astray.

On the basis of the above, we consider it unlikely that Finterai will become the data controller for any personal data uncovered in the checks performed. Rather, the checks must be said to have been performed on behalf of the bank with which the model was last trained, with Finterai acting as data processor for the banks to the extent that personal data are processed as part of the checks. It would be natural to include guidelines in the data processor agreement setting out the measures Finterai and the banks must implement if an error occurs and Finterai gains access to personal data.

For models that do not contain personal data, neither the checks performed nor the further sharing of the models will constitute processing within the meaning of the GDPR, since personal data are not processed. The GDPR will therefore not apply in such cases. If there is any doubt about whether or not the model contains personal data, it should be treated as though it did contain personal data and the GDPR were applicable.
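The handling of checked models described in this section can be summarised as a small decision rule. The sketch below is illustrative only: the names `Finding`, `Decision` and `decide` are hypothetical, and it does not model how a vulnerability is actually detected. The text states that vulnerable models are deleted and the source bank notified, and that doubtful cases should be treated as containing personal data. Withholding a doubtful model from sharing is an added assumption.

```python
from dataclasses import dataclass
from enum import Enum


class Finding(Enum):
    CLEAN = "clean"            # no vulnerability found
    VULNERABLE = "vulnerable"  # check indicates possible data leakage
    UNCERTAIN = "uncertain"    # check could not rule out personal data


@dataclass(frozen=True)
class Decision:
    share_model: bool
    delete_model: bool
    notify_source_bank: bool
    treat_as_personal_data: bool


def decide(finding: Finding) -> Decision:
    """Map the outcome of a model check to the handling described in the text."""
    if finding is Finding.CLEAN:
        return Decision(share_model=True, delete_model=False,
                        notify_source_bank=False, treat_as_personal_data=False)
    if finding is Finding.VULNERABLE:
        # Stated in the text: delete the model and notify the source bank.
        return Decision(share_model=False, delete_model=True,
                        notify_source_bank=True, treat_as_personal_data=True)
    # In case of doubt, the text says to treat the model as personal data.
    # Assumption: such a model is withheld rather than shared or deleted.
    return Decision(share_model=False, delete_model=False,
                    notify_source_bank=False, treat_as_personal_data=True)
```

Keeping the rule explicit like this is one way the measures agreed in a data processor agreement could be made checkable, but the actual measures are for Finterai and the banks to define.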