Summary

Finterai is a Norwegian start-up that wants to tackle a societal problem that has previously caused far larger organisations many a sleepless night, namely money laundering and the financing of terrorism. Banks are required to do what they can to prevent these crimes, but struggle to do so effectively.

The crux of the problem is that each individual bank has “too few” criminal transactions to reliably indicate what actually distinguishes a suspicious transaction from a run-of-the-mill one. As a result, the banks’ electronic surveillance systems flag far too many legitimate transactions (false positives), and each of these triggers a time-consuming and costly manual follow-up investigation. The problem could potentially be resolved by building systems trained on more data than any single bank holds. The challenge is that banks cannot share the necessary data among themselves, because transaction data contain personal data.

Can federated learning solve the conundrum?

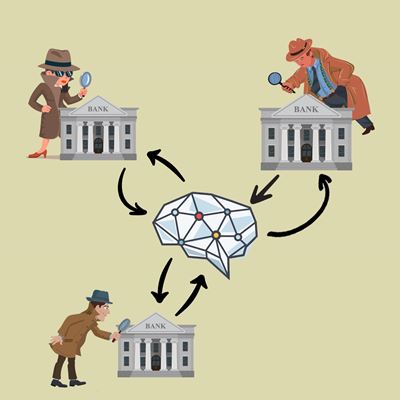

Finterai wants to resolve this data-sharing problem by applying a relatively new machine learning method called “federated learning”. Federated learning is a decentralised approach to artificial intelligence (AI) that is considered more privacy-friendly than many other forms of machine learning. Each participant trains the model on its own data and shares only the resulting model updates, so the banks can learn from each other without sharing data about their customers.
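This report does not describe Finterai’s actual training protocol. Purely as a generic illustration of the idea, the sketch below shows federated averaging (FedAvg), one common federated learning scheme: three hypothetical banks each train a copy of a shared logistic-regression model on their own synthetic data, and only the weights and sample counts leave each bank for aggregation. The model, the synthetic data and all function names are assumptions made for this example.

```python
import math
import random

def predict(weights, x):
    # Toy logistic model: estimated probability that a transaction is suspicious.
    z = weights[0] + sum(w * xi for w, xi in zip(weights[1:], x))
    return 1.0 / (1.0 + math.exp(-z))

def local_train(weights, data, lr=0.5, epochs=50):
    # Runs on a single bank's premises; the raw (x, y) records never leave here.
    w = list(weights)  # copy so the shared global model is not modified in place
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y  # gradient of cross-entropy loss w.r.t. z
            grad[0] += err
            for j, xj in enumerate(x):
                grad[j + 1] += err * xj
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w

def federated_average(updates):
    # updates: list of (weights, n_samples); sample-weighted mean as in FedAvg.
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[j] * n for w, n in updates) / total for j in range(dim)]

def federated_round(global_weights, participants):
    # Only model weights and sample counts are sent to the aggregator.
    updates = [(local_train(global_weights, data), len(data)) for data in participants]
    return federated_average(updates)

def make_bank_data(rng, n):
    # Hypothetical synthetic data: one scaled feature; label 1 above a threshold.
    data = []
    for _ in range(n):
        x = [rng.random()]
        data.append((x, 1 if x[0] > 0.7 else 0))
    return data

rng = random.Random(0)
banks = [make_bank_data(rng, n) for n in (30, 50, 20)]
weights = [0.0, 0.0]
for _ in range(10):
    weights = federated_round(weights, banks)
```

Because the shared weights are still derived from each bank’s local data, they are not automatically free of personal information, which is why attacks such as model inversion remain a consideration.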

In the sandbox project, we have explored three questions that federated learning raises in relation to the data protection regulations. This has led to the three conclusions presented below.

Conclusions

- Processing responsibility: The banks themselves will always have a decisive influence over both the purpose of and the means for the processing activities discussed in this report and will therefore be the data controllers. Finterai will probably not act as a data controller for these activities, although a more detailed assessment of the legal basis and all the factual circumstances will be required before this can be determined conclusively. Finterai’s task will be to monitor the models’ vulnerabilities and ensure that they do not contain personal data. The company will therefore probably act as the banks’ data processor.

- Data minimisation: Each bank’s customer risk profile affects the requirements it must meet under the anti-money laundering regulations, including how much data it must collect on its customers. It can therefore be challenging to standardise the data categories that every bank must always have access to in order to participate in the federated learning process while also upholding the principle of data minimisation. Nevertheless, we do not rule out the possibility of identifying some data categories that the banks may always be required to have access to. However, to meet the data minimisation requirement, the system should be designed so that the banks can defer obtaining personal data until they know for certain that they will need them.

- Security challenges: Federated learning has both strengths and weaknesses when it comes to information security and the protection of personal data. It reduces the need for data sharing, but it is also a relatively new method. The solution makes extensive use of cloud services, which requires data security expertise but also allows the participating organisations to largely use their own capabilities and resources to secure their part of the solution. One potential attack vector in federated learning is the model inversion attack, which aims to reconstruct (personal) data from access to trained models. The risk of such an attack is deemed low, but it is also difficult to evaluate.

What is the sandbox?

In the sandbox, participants and the Norwegian Data Protection Authority jointly explore issues relating to the protection of personal data in order to help ensure the service or product in question complies with the regulations and effectively safeguards individuals’ data privacy.

The Norwegian Data Protection Authority offers guidance in dialogue with the participants. The conclusions drawn from the projects do not constitute binding decisions or prior approval. Participants are at liberty to decide whether to follow the advice they are given.

The sandbox is a useful method for exploring issues where there are few legal precedents, and we hope the conclusions and assessments in this report can assist others addressing similar issues.